The ability of mathematics to describe the behavior of nature, particularly in physics, is surprising, especially if one regards mathematics as an abstract entity created by the human mind and disconnected from physical reality. If mathematics is indeed a human creation, how is such a precise correspondence possible?

For centuries this has been a topic of debate, centered on two opposing ideas: is mathematics invented or discovered by humans?

This question has divided the scientific community, including philosophers, physicists, logicians, cognitive scientists and linguists. Not only is there no consensus, but the positions held are generally diametrically opposed. In “Is God a Mathematician?” [1], Mario Livio gives a broad and precise account of the history of the subject, from the Greek philosophers to the present day.

The aim of this post is to analyze this dilemma by introducing new analytical tools such as Information Theory (IT) [2], Algorithmic Information Theory (AIT) [3] and the Theory of Computation (TC) [4], without forgetting the perspective offered by recent advances in Artificial Intelligence (AI).

In this post we briefly review the current state of the issue, without going into its historical development, and try to identify the difficulties that hinder its resolution. Subsequent posts will analyze the problem from an unconventional perspective, using the logical tools that the above theories offer.

Currents of thought: invented or discovered?

Put very simply, the position that mathematics is discovered by humans is currently led by Max Tegmark, who states in “Our Mathematical Universe” [5] that the universe is a purely mathematical entity. This would explain why mathematics describes reality so precisely: reality itself would be a mathematical entity.

At the other extreme, a large group of scientists, including cognitive scientists and biologists, maintain on the basis of the brain’s capabilities that mathematics is an entity invented by humans.

Max Tegmark: Our Mathematical Universe

In neither case are there arguments that tip the balance towards one of the hypotheses. Tegmark, for instance, maintains that the definitive theory (Theory of Everything) cannot include concepts such as “subatomic particles”, “vibrating strings”, “space-time deformation” or other man-made constructs. Therefore, the only possible description of the cosmos involves only abstract concepts and the relations between them, which for him constitute the operative definition of mathematics.

This reasoning assumes that the cosmos has a nature completely independent of human perception, and that its behavior is governed exclusively by such abstract concepts. This view seems correct insofar as it eliminates any anthropic view of the universe, of which humans are only a part. However, it does not justify the claim that physical laws and abstract mathematical concepts are the same entity.

Among those who maintain that mathematics is an entity invented by humans, the arguments do not usually have a formal structure, and in many cases they reflect a personal position or sentiment. An exception is the position held by biologists and cognitive scientists, whose arguments rest on the creative capacity of the human brain, which would justify that mathematics is an entity created by humans.

For them, mathematics does not really differ from natural language, so mathematics would be no more than another language. On this view, the conception of mathematics is nothing more than the idealization and abstraction of elements of the physical world. However, this approach faces several difficulties before it can conclude that mathematics is an entity invented by humans.

First, it does not provide formal criteria for its demonstration. Second, it presupposes that the ability to learn is an attribute exclusive to humans. This is a crucial point, which will be addressed in later posts. Third, it uses natural language as a central concept without taking into account that any interaction, whatever its nature, is carried out through language, as shown by the Theory of Computation (TC) [4], which is a theory of language.

Consequently, neither current of thought presents conclusive arguments about the nature of mathematics. It therefore seems necessary to analyze, from new points of view, why this is so, since physical reality and mathematics seem intimately linked.

Mathematics as a discovered entity

For those who consider mathematics the very essence of the cosmos, and therefore an entity discovered by humans, the argument is the equivalence between mathematical models and physical behavior. But for this argument to be conclusive, the Theory of Everything would have to be developed, and in it the physical entities would be strictly mathematical in nature. Reality would then rest on a set of axioms together with the information describing the model, the state and the dynamics of the system.

This amounts to a dematerialization of physics, something that seems to be happening as the deeper structures of physics are developed. Thus, the particles of the Standard Model are nothing more than abstract entities with observable properties. This could be the key, and there is a hint in Landauer’s principle [6], which links information to energy by setting a minimum energy cost for erasing a bit of information.
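To give a sense of the scale involved, Landauer’s principle is usually stated as a lower bound on the energy dissipated when one bit of information is erased. The symbols used here ($k_B$ for Boltzmann’s constant, $T$ for the temperature of the environment) and the choice of $T = 300\,\mathrm{K}$ are assumptions made for this illustration, not something taken from the discussion above:

```latex
\begin{aligned}
E_{\min} &= k_B \, T \ln 2 \\
         &\approx \left(1.380649 \times 10^{-23}\ \mathrm{J/K}\right) \times \left(300\ \mathrm{K}\right) \times 0.6931 \\
         &\approx 2.87 \times 10^{-21}\ \mathrm{J}
\end{aligned}
```

The amount is tiny on an everyday scale, but it is not zero, which is what makes the principle suggestive as a link between an abstract quantity (a bit) and a physical one (energy).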

But solving the problem by physical means or, more precisely, by contrasting mathematical models with reality presents a fundamental difficulty. In general, mathematical models describe the functionality of a certain context or layer of reality, and they share a common characteristic: they are irreducible and disconnected from the underlying layers. The deepest functional layer would therefore have to be unraveled, which from the point of view of AIT and TC is a non-computable problem.
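The kind of non-computability mentioned here can be made a little more concrete. In AIT, the Kolmogorov complexity of a string (the length of the shortest program that produces it) cannot be computed in general, but any compressor gives an upper bound on it. The following is a minimal sketch, assuming Python’s standard `zlib` module as a stand-in compressor; the function name `complexity_upper_bound` and the two sample strings are hypothetical and chosen only for illustration.

```python
import random
import zlib


def complexity_upper_bound(data: bytes) -> int:
    """Length in bytes of a zlib-compressed copy of `data`.

    This is only an upper bound (up to an additive constant) on the
    Kolmogorov complexity of `data`; the true value is not computable.
    """
    return len(zlib.compress(data, 9))


# A highly regular string: a short rule ("repeat 01") generates all of it.
regular = b"01" * 5000

# A pseudo-random string of the same length, seeded for reproducibility.
# It is also generated by a short program (generator plus seed), so its
# true complexity is small, yet zlib cannot find that short description.
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(len(regular)))

if __name__ == "__main__":
    print("regular:", complexity_upper_bound(regular), "bytes")
    print("noisy:  ", complexity_upper_bound(noisy), "bytes")
```

The regular string compresses to a small fraction of its length, while the pseudo-random one barely compresses at all, even though it too was produced by a short program. A compressor can only confirm that a short description exists; it can never rule one out, and this is the practical face of non-computability.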

Mathematics as an invented entity

The current of opinion in favor of mathematics being an entity invented by humans is based on natural language and on the brain’s ability to learn, imagine and create.

But this argument has two fundamental weaknesses. On the one hand, it does not provide formal arguments to conclusively demonstrate the hypothesis that mathematics is an invented entity. On the other hand, it attributes properties to the human brain that are a general characteristic of the cosmos.

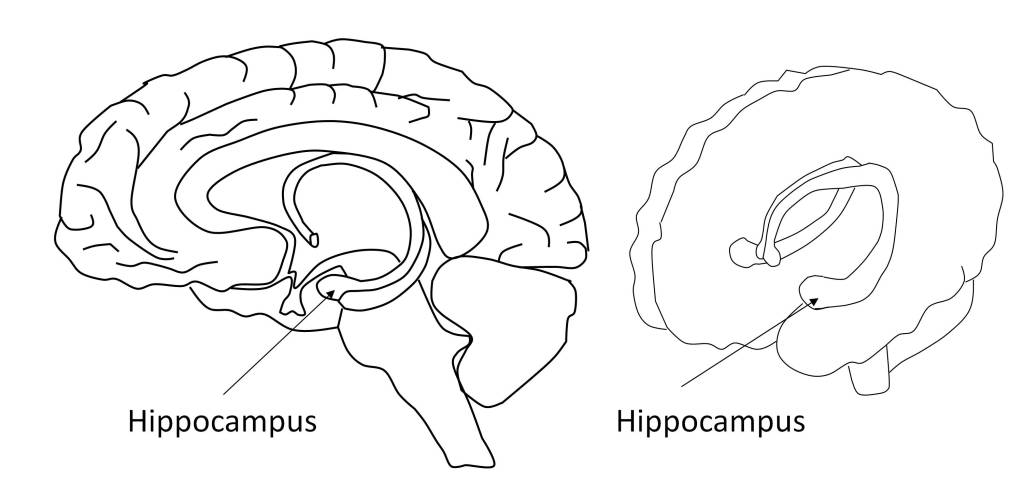

The Hippocampus: A paradigmatic example of the dilemma discovered or invented

To clarify this last point, let us take as an example the invention of the natural numbers by humans, which is often used to support this view. Now imagine an animal interacting with its environment. To survive, it has to interpret space-time accurately. Obviously, the animal must have learned or invented the space-time map, something much more complex than the natural numbers.

Moreover, nature has provided or invented the hippocampus [7], a neuronal structure specialized in acquiring long-term information. Its complex convolution forms a recurrent neuronal network that is well suited to handling the space-time map and to resolving trajectories. This structure is, of course, physical and encoded in the genome of higher animals. The question is: is this structure discovered or invented by nature?

Regarding the use of language as an argument, it should be noted that language is the means of interaction in nature at all functional levels. Thus, biology is a language and the interaction between particles is formally a language, although this point requires a deeper analysis to be justified. Natural language in particular is a non-formal language, so it is not axiomatic, which makes it inconsistent.

Finally, the learning capability attributed to the brain is a fundamental characteristic of nature, as demonstrated by mathematical models of learning and as AI is beginning to show.

Another way of approaching the question of the nature of mathematics is through Wigner’s enigma [8], in which he asks about the unreasonable effectiveness of mathematics. This topic, and those raised above, will be expanded on in later posts.

References

| [1] | M. Livio, Is God a Mathematician?, New York: Simon & Schuster Paperbacks, 2009. |

| [2] | C. E. Shannon, “A Mathematical Theory of Communication,” The Bell System Technical Journal, vol. 27, pp. 379-423, 1948. |

| [3] | P. Grünwald and P. Vitányi, “Shannon Information and Kolmogorov Complexity,” arXiv:cs/0410002v1 [cs.IT], 2008. |

| [4] | M. Sipser, Introduction to the Theory of Computation, Course Technology, 2012. |

| [5] | M. Tegmark, Our Mathematical Universe: My Quest For The Ultimate Nature Of Reality, Knopf Doubleday Publishing Group, 2014. |

| [6] | R. Landauer, “Irreversibility and Heat Generation in the Computing Process,” IBM J. Res. Dev., vol. 5, pp. 183-191, 1961. |

| [7] | S. Jacobson and E. M. Marcus, Neuroanatomy for the Neuroscientist, Springer, 2008. |

| [8] | E. P. Wigner, “The Unreasonable Effectiveness of Mathematics in the Natural Sciences,” Communications on Pure and Applied Mathematics, vol. 13, no. 1, pp. 1-14, 1960. |