Self-awareness, which lies at the heart of the concept of consciousness, has been and remains an enigma for philosophers, anthropologists and neuroscientists. Perhaps most striking is that consciousness is central to human behavior, and yet being aware of it does not give us an explanation for it.

Without going into detail, until the modern age the concept of consciousness was deeply rooted in the notion of the soul and in religious beliefs, which often attributed the distinction between human nature and that of other species to divine intervention.

The modern age brought a substantial change, based first on Descartes’ “cogito ergo sum” (“I think, therefore I am”) and later on the model proposed by Kant, which is built around what are known as “transcendental arguments” [1].

Subsequently, a variety of schools of thought have developed, most notably dualistic, monistic, materialistic and neurocognitive theories. In general terms, these theories focus on the psychological and phenomenological aspects that describe conscious reality; in the case of neurocognitive theories, neurological evidence is a fundamental pillar. Ultimately, however, all these theories are abstract in nature and, for the time being, have failed to provide a formal account of consciousness, of how a “being” can develop conscious behavior, or of how concepts such as morality and ethics arise.

One aspect these models address, and one that calls the “cogito” into question, is the change in behavior caused by brain damage, which in some cases can be reversed through re-education. This shows that the brain and learning processes play a fundamental role in consciousness.

In this regard, advances in Artificial Intelligence (AI) [2] have laid out the formal foundations of learning, through which an algorithm can acquire knowledge and in which neural networks are now a fundamental component. This new knowledge may therefore shed light on the nature of consciousness.

The Turing Test paradigm

To analyze the mechanisms that may support consciousness, we can start with the Turing Test [3], which tests whether a machine exhibits behavior similar to that of a human being.

Without going into the formal definition of the Turing Test, we can liken it to a chatbot, as shown in Figure 1, which gives an intuitive idea of the concept. We can go further by considering how such a chatbot is implemented. This requires a huge number of human-to-human dialogues, which are used to train the model with Deep Learning techniques [4]. Strange as it may seem, obtaining these dialogues is the most laborious part of the process.

Figure 1. Schematic of the Turing Test

Once the chatbot has been trained, we can examine its behavior from a psychophysical point of view. The answer seems fairly obvious: although it can exhibit very complex behavior, that behavior will always be reflexive, even if the interlocutor infers that the chatbot has feelings or even behaves intelligently. The latter point is controversial because of the difficulty of defining what constitutes intelligent behavior, as the questions “Intelligent? Compared to what?” make clear.

However, the Turing Test only aims to determine whether a machine can show human-like behavior; it does not analyze the mechanisms that produce this functionality.

In humans, these mechanisms can be classified into two categories: genetic learning and neural learning.

Genetic learning

Genetic learning is based on the capacity of biology to learn, establishing functions adapted to processing the surrounding reality. Put this way, it may not seem an obvious or convincing argument, but DNA computing [5] demonstrates that biological molecules can carry out computation, which lends formal support to the idea of biological learning. The capabilities acquired through this process evolve by trial and error, which is inherent to learning. As a result, biological evolution is a slow process, as nature shows.
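As a loose illustration of learning by trial and error, and not a model of DNA computing or of real biological evolution, the following Python sketch adapts a bit string (a hypothetical "genome") to a fixed target by random mutation and selection. The target, the mutation rate and all function names are assumptions chosen only for illustration.

```python
import random

def fitness(genome, target):
    """Number of positions where the genome matches the target."""
    return sum(g == t for g, t in zip(genome, target))

def mutate(genome, rate, rng):
    """Trial: flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]

def evolve(target, generations=2000, rate=0.05, seed=0):
    """(1+1) evolutionary loop: a mutant replaces its parent only if it is at least as fit."""
    rng = random.Random(seed)
    parent = [rng.randint(0, 1) for _ in target]
    history = [fitness(parent, target)]
    for _ in range(generations):
        child = mutate(parent, rate, rng)
        if fitness(child, target) >= fitness(parent, target):
            parent = child                      # selection: failed trials are discarded
        history.append(fitness(parent, target))
        if history[-1] == len(target):          # fully adapted: stop early
            break
    return parent, history

target = [1, 0] * 16   # hypothetical "environment" the genome must adapt to
best, history = evolve(target)
print(f"generations: {len(history) - 1}, fitness: {history[-1]}/{len(target)}")
```

The printed number of generations gives a rough sense of how many trials even this tiny problem requires.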

Instinctive reactions are based on genetic learning, so that all species of living beings are endowed with certain faculties without needing significant subsequent training. Examples include the survival instinct, the reproductive instinct, and the maternal and paternal instincts. These functions are located in the inner layers of the brain, which humans share with other vertebrates.

We will not go into the details of neuroscience [6], since all that matters for this analysis are two fundamental aspects: the functional specialization and the plasticity of each of the brain's neural structures. Structure, plasticity and specialization are determined by genetic factors, so that inner layers such as the limbic system have highly specialized functionality and require little training to be functional. In contrast, the outer structures, located in the neocortex, are highly plastic, and their functionality is strongly influenced by learning and experience.

Thus, genetic learning is responsible for structure, plasticity and specialization, whereas neural learning is intimately linked to the plastic functionality of neural tissue.

A clear example of functional specialization based on genetic learning is the space-time processing that we share with other higher animals and that is located in the limbic system. It provides the brain with structures dedicated to building a spatial map and processing temporal delays, which gives the ability to anticipate trajectories, something vital both for survival and for interacting with spatio-temporal reality.

This functionality is highly automatic, which makes it effective from the moment of birth. However, this is not entirely the case in humans, since these neural systems work in coordination with the neocortex, which requires extensive neural training.

For example, this functional specialization prevents us from visualizing and intuitively understanding geometries of more than three spatial dimensions, which humans can only handle abstractly, at a higher level, through the neocortex, whose functionality is plastic and which is the main support for neural learning.

It is worth noting that the neocortex, whose response time is longer than that of the lower layers, can interfere with automatic reactions. This is evident in the loss of concentration in activities that demand a high degree of automatism, as in certain sports. This is why, in addition to appropriate physical capacity and well-developed, well-trained automatic processing, elite athletes require specific psychological preparation.

This applies to all sensory systems, such as vision, hearing and balance, in which genetic learning determines and conditions the interpretation of information coming from the sensory organs. As this information ascends to the higher layers of the brain, however, its processing and interpretation are determined by neural learning.

This is what differentiates humans from other species: they are endowed with a highly developed neocortex, which provides a very significant neural learning capacity and from which the conscious being seems to emerge.

Nevertheless, there is solid evidence that some species are able to feel and have a certain level of consciousness. This has prompted a movement for the legal recognition of feelings in certain animal species, and even for the recognition of personhood for some hominid species.

Neural learning: AI as a source of intuition

Currently, AI consists of a set of mathematical strategies grouped under different names according to their characteristics. In this classification, Machine Learning (ML) comprises classical mathematical algorithms such as statistical methods, decision trees, clustering and support vector machines. Deep Learning, on the other hand, is inspired by the functioning of neural tissue and exhibits complex behavior that approximates certain human capabilities.

At the current stage of development of this discipline, designs are limited to implementing and training systems for specific tasks, such as automatic diagnosis systems, assistants, chatbots and games, which is why these systems are grouped under the name Artificial Narrow Intelligence.

The perspective offered by this new knowledge makes it possible to establish three major categories within AI:

- Artificial Narrow Intelligence. AI systems designed and trained for specific tasks.

- Artificial General Intelligence. AI systems with a capacity similar to that of human beings.

- Artificial Super Intelligence. Self-aware AI systems with a capacity equal to or greater than that of human beings.

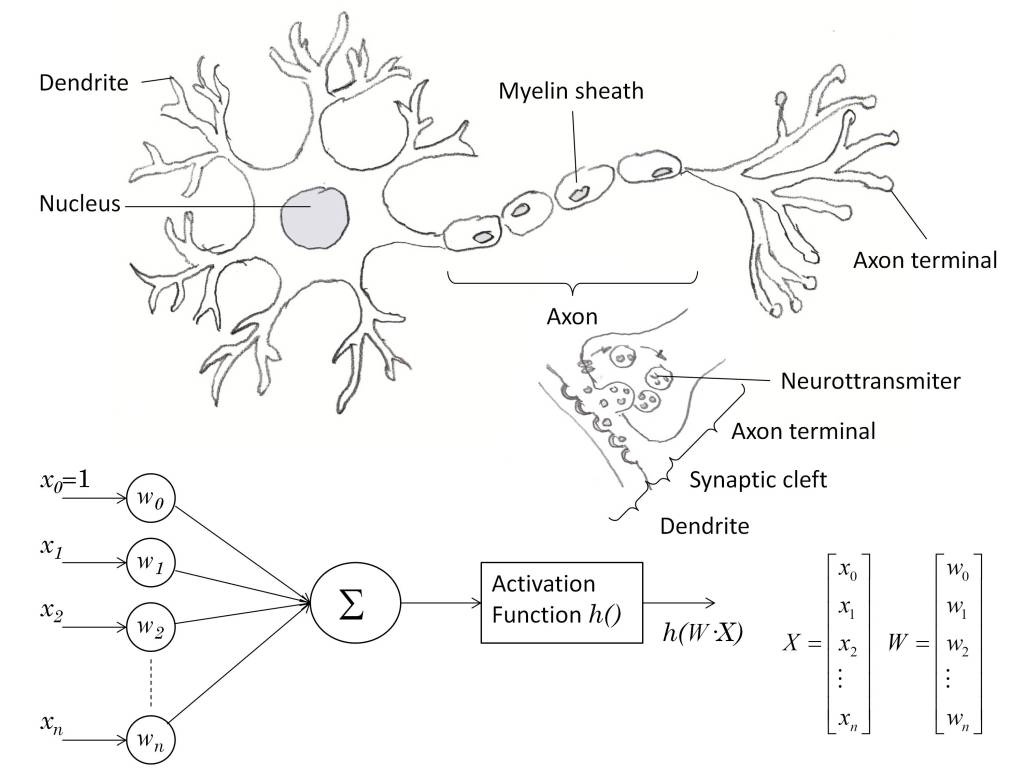

The neural networks used in Deep Learning are inspired by the functioning of neurons and neural tissue, as shown in Figure 2 [7]. In this model, the nerve stimuli arriving from the axon terminals connected to the dendrites (synapses) are weighted and processed according to the functional configuration the neuron has acquired through learning, producing a nerve impulse that propagates to other neurons through its axon terminals.

Figure 2. Structure of a neuron and mathematical model
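The mathematical neuron of Figure 2 can be sketched in a few lines of Python. The sketch assumes the common formulation y = f(Σ W_i·x_i + b), with a sigmoid activation f and a bias term b; the bias and the choice of sigmoid are assumptions, not details given in the text.

```python
import math

def sigmoid(z):
    """Smooth activation that squashes the weighted input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, b):
    """Weight each incoming stimulus (synapse), sum them, add a bias and apply the activation."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

# Three input stimuli and hypothetical weights "acquired by learning"
print(neuron([1.0, 0.5, -1.0], [0.4, -0.2, 0.1], b=0.0))
```

Here the weighted sum is 0.4 - 0.1 - 0.1 = 0.2, so the output is sigmoid(0.2), roughly 0.55.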

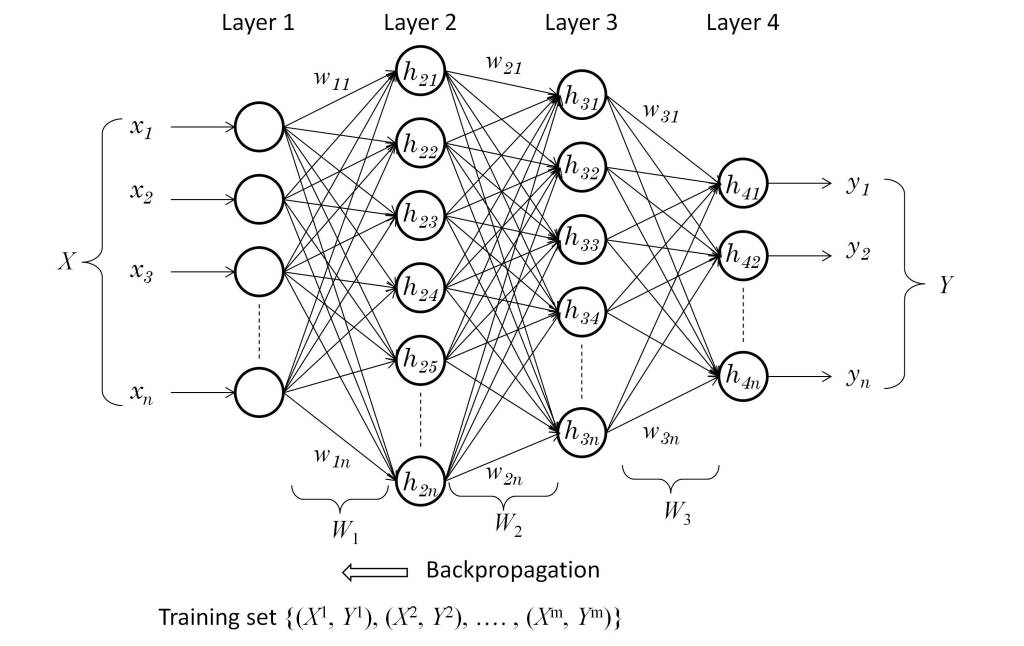

Artificial neural networks are built by arranging the mathematical neuron model in layers, as shown in Figure 3. A fundamental issue in this model is how to determine the weights W_i of each of the units that make up the network. In principle, the mechanisms of biological neural tissue could be used for this purpose. However, although there is a general idea of how synaptic function is configured, how functionality is established at the level of the whole neural network is still a mystery.

Figure 3. Artificial Neural Network Architecture
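Stacking neurons into layers, as in Figure 3, amounts to applying the neuron model once per unit and feeding each layer's outputs to the next layer. The following Python sketch shows this forward propagation; the layer sizes, the sigmoid activation and the random initialization are illustrative assumptions.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_layer(n_in, n_out, rng):
    """One layer: for each unit, a randomly initialized weight vector W_i and a zero bias."""
    return [([rng.uniform(-1, 1) for _ in range(n_in)], 0.0) for _ in range(n_out)]

def forward(layers, x):
    """Propagate a stimulus through the layers; every unit applies the neuron model."""
    for layer in layers:
        x = [sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) for weights, bias in layer]
    return x

rng = random.Random(42)
sizes = [3, 4, 2]  # hypothetical input, hidden and output layer sizes
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
print(forward(layers, [1.0, 0.5, -1.0]))
```

With untrained random weights the two outputs are arbitrary values between 0 and 1; giving them meaning is the job of the training process.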

In the case of artificial neural networks, mathematics has found a way to establish the W_i values through what is known as supervised learning. This requires a dataset in which each element consists of a stimulus X_i and the expected response to that stimulus, Y_i. Once the W_i values have been randomly initialized, the training phase begins: each stimulus X_i is presented to the network and its response is compared with Y_i. The resulting errors are propagated backwards through the network by an algorithm known as backpropagation and are used to adjust the W_i values so as to reduce the error.
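A minimal sketch of this procedure in plain Python, assuming a tiny network with one hidden layer, sigmoid activations, a squared-error loss and the XOR function as the dataset of (X_i, Y_i) pairs. The task, network size, learning rate and number of epochs are illustrative assumptions, not details from the text.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(hidden=4, lr=0.5, epochs=5000, seed=1):
    """Train a 2-input, `hidden`-unit, 1-output network on XOR with backpropagation."""
    rng = random.Random(seed)
    data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]  # (X_i, Y_i) pairs
    # Random initialization of the weights; biases start at zero
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def predict(x):
        h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b) for ws, b in zip(w1, b1)]
        return h, sigmoid(sum(w * hi for w, hi in zip(w2, h)) + b2)

    def mean_squared_error():
        return sum((predict(x)[1] - y) ** 2 for x, y in data) / len(data)

    losses = [mean_squared_error()]
    for _ in range(epochs):
        for x, y in data:
            h, out = predict(x)                                  # forward pass
            d_out = (out - y) * out * (1 - out)                  # output error times sigmoid slope
            d_h = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(hidden)]  # error sent backwards
            for j in range(hidden):                              # gradient-descent updates
                w2[j] -= lr * d_out * h[j]
                b1[j] -= lr * d_h[j]
                for i in range(2):
                    w1[j][i] -= lr * d_h[j] * x[i]
            b2 -= lr * d_out
        losses.append(mean_squared_error())
    return predict, losses

predict, losses = train_xor()
print(f"mean squared error: {losses[0]:.3f} -> {losses[-1]:.3f}")
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, round(predict(x)[1], 2))
```

The constant factor 2 of the squared-error derivative is folded into the learning rate; libraries such as PyTorch or JAX compute these gradients automatically instead of by hand.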

By presenting the elements of a training set drawn from the dataset repeatedly, over several passes (epochs), the network reaches a state of convergence in which it achieves an adequate degree of accuracy. This accuracy is verified on a validation set made of dataset elements that are not used for training.
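The split between training and validation data, the repeated passes over the training set and the convergence check can be sketched with the simplest possible model, a single linear unit y = w·x + b. The synthetic dataset (y = 2x + 1 plus noise), the 80/20 split, the learning rate and the convergence tolerance are all hypothetical choices for illustration.

```python
import random

def make_dataset(n=200, seed=0):
    """Hypothetical dataset of (X_i, Y_i) pairs following y = 2x + 1 plus noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.1)) for x in xs]

def mse(w, b, data):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(data, val_fraction=0.2, lr=0.05, max_epochs=500, tol=1e-6, seed=0):
    rng = random.Random(seed)
    data = data[:]
    rng.shuffle(data)
    n_val = int(len(data) * val_fraction)
    val, train_set = data[:n_val], data[n_val:]   # validation elements are never used for training
    w, b = rng.uniform(-1, 1), 0.0
    prev = mse(w, b, train_set)
    for epoch in range(1, max_epochs + 1):         # one epoch = one pass over the training set
        for x, y in train_set:
            err = w * x + b - y                    # squared-error gradient (factor 2 folded into lr)
            w -= lr * err * x
            b -= lr * err
        cur = mse(w, b, train_set)
        if abs(prev - cur) < tol:                  # convergence: training error stops changing
            break
        prev = cur
    return w, b, epoch, mse(w, b, val)

w, b, epochs, val_err = train(make_dataset())
print(f"w={w:.2f}, b={b:.2f}, epochs={epochs}, validation MSE={val_err:.4f}")
```

A validation error close to the training error suggests the model has learned the underlying relation rather than memorized the training elements.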

An example makes the nature of a dataset's elements easier to grasp. In a dataset used to train autonomous driving systems, the X_i are images in which different types of vehicles, pedestrians, roads, etc. appear. Each image has an associated category Y_i that specifies the patterns appearing in it. Note that at the current stage of development of AI systems, datasets are built by humans, so learning is supervised and requires significant resources.
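To make the structure of such a dataset element concrete, the sketch below pairs an image (X_i) with its human-assigned category Y_i, encoded as a multi-hot vector over a fixed list of pattern classes. The class names, file names and the multi-hot encoding are hypothetical choices; real autonomous driving datasets often use richer annotations, such as bounding boxes.

```python
from dataclasses import dataclass

CLASSES = ["car", "truck", "pedestrian", "cyclist", "road"]  # hypothetical pattern classes

@dataclass
class Sample:
    image_path: str   # X_i: the stimulus (here just a path to an image file)
    labels: set       # patterns a human annotator marked as present in the image

    def __post_init__(self):
        unknown = self.labels - set(CLASSES)
        if unknown:                                  # catch labeling mistakes early
            raise ValueError(f"unknown labels: {unknown}")

    def target(self):
        """Y_i as a multi-hot vector: 1 if the class appears in the image, else 0."""
        return [1 if c in self.labels else 0 for c in CLASSES]

dataset = [
    Sample("frame_0001.png", {"car", "road"}),
    Sample("frame_0002.png", {"pedestrian", "cyclist", "road"}),
]
for s in dataset:
    print(s.image_path, s.target())
```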

In unsupervised learning, the category Y_i is generated automatically, although this approach is still at a very early stage of development. A very illustrative example is the AlphaZero program developed by DeepMind [8]: it is given only the rules of the game (chess, Go or shogi) and plays matches against itself, so that the moves and the outcome of each game form the pairs (X_i, Y_i). The neural network is continuously updated with these results, which progressively improves its play and therefore the quality of the new (X_i, Y_i) pairs, until it reaches a superhuman level.
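The self-play idea can be illustrated on a much smaller scale. The sketch below is a toy tabular analogue, not AlphaZero's algorithm (which combines a deep neural network with Monte Carlo tree search): a program that knows only the rules of a simple take-away game (take 1 to 3 stones; whoever takes the last stone wins) plays against itself, turns each game into (X_i, Y_i) pairs of position and outcome, and uses them to update its value estimates, which in turn shape its next games. The game, the value table standing in for a neural network and all parameters are assumptions.

```python
import random

MOVES = (1, 2, 3)  # a player may take 1, 2 or 3 stones; whoever takes the last stone wins

def choose_move(pile, values, eps, rng):
    """Epsilon-greedy: usually leave the opponent in the position with the lowest estimated value."""
    legal = [k for k in MOVES if k <= pile]
    if rng.random() < eps:
        return rng.choice(legal)                       # occasional exploration
    best = min(values[pile - k] for k in legal)
    return rng.choice([k for k in legal if values[pile - k] == best])

def self_play_game(values, eps, rng, max_pile=12):
    """Play one game against itself; return (X_i, Y_i) pairs of position and final outcome."""
    pile = rng.randint(1, max_pile)
    history = []                                       # positions faced by the player to move
    while pile > 0:
        history.append(pile)
        pile -= choose_move(pile, values, eps, rng)
    # The player who made the last move won; players alternate, so walk backwards.
    outcome, pairs = 1, []
    for position in reversed(history):
        pairs.append((position, outcome))
        outcome = -outcome
    return pairs

def train(games=5000, eps=0.2, alpha=0.1, seed=0, max_pile=12):
    rng = random.Random(seed)
    values = [0.0] * (max_pile + 1)
    values[0] = -1.0                                   # facing an empty pile means you have lost
    for _ in range(games):
        for x, y in self_play_game(values, eps, rng, max_pile):
            values[x] += alpha * (y - values[x])       # move the estimate toward the observed result
    return values

values = train()
print({pile: round(v, 2) for pile, v in enumerate(values)})
```

In this game, piles that are multiples of 4 are known to be losing for the player to move, so their learned values should become negative while the other positions become positive, even though that rule was never given to the program.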

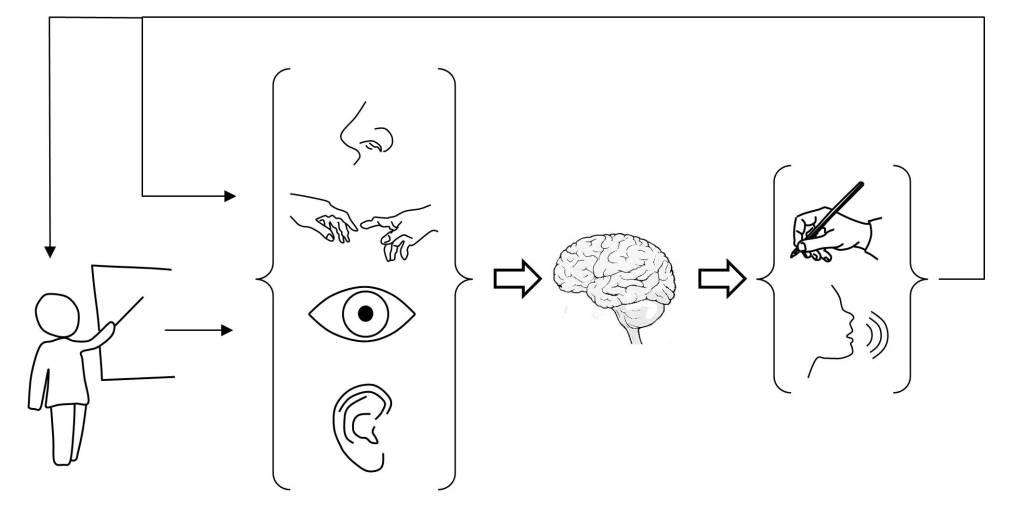

It is important to note that in higher living beings, unsupervised learning takes place through the interaction of the afferent (sensory) neural system and the efferent (motor) neural system. This interaction takes place at two levels, which do not differ substantially from a functional point of view, as shown in Figure 4:

- Interaction with the inanimate environment.

- Interaction with other living beings, especially of the same species.

The first level of interaction provides knowledge about physical reality. The second level, in contrast, allows the establishment of survival habits and, above all, social habits. In humans, this level acquires great importance and complexity, since concepts such as morality and ethics emerge from it, as well as the capacity to accumulate knowledge and transmit it from generation to generation.

Figure 4. Structure of unsupervised learning

Consequently, unsupervised learning is based on the recursive interaction of afferent and efferent systems. This means that, unlike the unidirectional models used in Deep Learning, unsupervised AI systems require two independent systems: an afferent system that produces a response to a stimulus, and an efferent system that, based on that response, corrects the behavior of the afferent system by means of a reinforcement technique.
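This two-system structure can be sketched in Python, assuming a contextual-bandit setting and a gradient-bandit (REINFORCE-style) update as the reinforcement technique, since the text does not specify one. The stimuli, responses, reward rule, fixed baseline and learning rate are all hypothetical.

```python
import math
import random

STIMULI = ["light", "sound"]        # hypothetical stimuli
RESPONSES = ["approach", "avoid"]   # hypothetical responses

class Afferent:
    """Maps a stimulus to a response through adjustable preferences (softmax policy)."""
    def __init__(self):
        self.prefs = {s: [0.0] * len(RESPONSES) for s in STIMULI}

    def probabilities(self, stimulus):
        exps = [math.exp(p) for p in self.prefs[stimulus]]
        total = sum(exps)
        return [e / total for e in exps]

    def respond(self, stimulus, rng):
        probs = self.probabilities(stimulus)
        return rng.choices(range(len(RESPONSES)), weights=probs)[0]

class Efferent:
    """Judges a response by its effect on the environment and corrects the afferent system."""
    def __init__(self, lr=0.1, baseline=0.5):
        self.lr = lr
        self.baseline = baseline    # fixed reference reward, a simplification

    def environment_feedback(self, stimulus, response):
        # Hypothetical rule: approaching light and avoiding sound are rewarded.
        good = {"light": "approach", "sound": "avoid"}[stimulus]
        return 1.0 if RESPONSES[response] == good else 0.0

    def correct(self, afferent, stimulus, response):
        reward = self.environment_feedback(stimulus, response)
        probs = afferent.probabilities(stimulus)
        for a in range(len(RESPONSES)):
            # Gradient of log-softmax: reinforce the chosen response if reward beats the baseline
            grad = (1.0 if a == response else 0.0) - probs[a]
            afferent.prefs[stimulus][a] += self.lr * (reward - self.baseline) * grad

rng = random.Random(0)
afferent, efferent = Afferent(), Efferent()
for _ in range(2000):
    stimulus = rng.choice(STIMULI)
    response = afferent.respond(stimulus, rng)        # afferent: stimulus -> response
    efferent.correct(afferent, stimulus, response)    # efferent: response -> correction

for s in STIMULI:
    print(s, [round(p, 2) for p in afferent.probabilities(s)])
```

No labeled Y_i is ever supplied: the afferent system improves only through the corrections fed back by the efferent system.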

What is the foundation of consciousness?

Two fundamental aspects can be deduced from the development of AI:

- The learning capability of algorithms.

- The need for afferent and efferent structures to support unsupervised learning.

It is also known that brain trauma or age-related pathologies can produce changes in personality and conscious perception. This clearly indicates that these functions reside in the brain and are supported by neural tissue.

However, we need to turn to anthropology for a more precise idea of the foundations of consciousness and of how it developed in human beings. A direct correlation can be observed between the cranial capacity of a hominid species and its abilities, social organization, spirituality and, above all, its abstract perception of the surrounding world. This correlation is clearly tied to the size of the neocortex and can be observed to a lesser extent in other species, such as primates, which show a capacity for emotional pain, structured social organization and a certain degree of abstract learning.

From all of the above, one could conclude that consciousness emerges from the learning capacity of neural tissue and arises once the structural complexity and functional resources of the brain reach an appropriate level of development. But this leads straight back to the scenario posed by the Turing Test: we would obtain a system whose complex behavior is indistinguishable from that of a human, which provides no proof that consciousness exists.

To understand this, we can ask how a human concludes that all other humans are self-aware. In reality, they have no argument for reaching this conclusion, since at most they could check that others pass the Turing Test. A human concludes that other humans are conscious because of their resemblance to themselves: through introspection, a human knows they are self-aware, and since other humans are similar, they conclude that other humans are self-aware too.

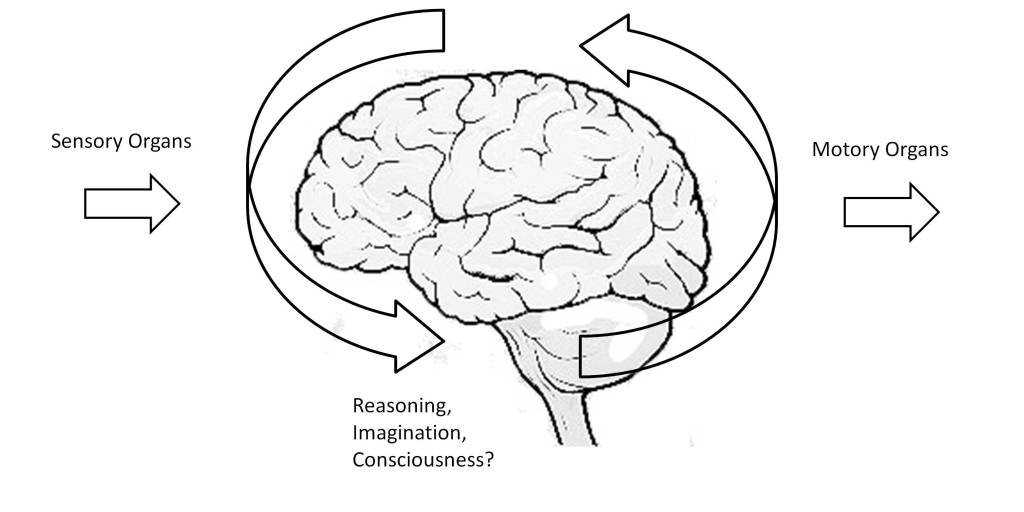

Ultimately, the only answer that can be given to the question of what underlies consciousness is the brain's own introspection mechanism. The unsupervised learning scheme highlighted the afferent and efferent mechanisms that allow the brain to interact with the outside world through the sensory and motor organs. To this model, however, we must add another flow of information, shown in Figure 5, which enhances learning. It corresponds to the interconnection of the brain's neural structures, which recursively establish the mechanisms of reasoning, imagination and, why not, consciousness.

Figure 5. Mechanism of reasoning and imagination.

This statement may seem radical, but on reflection, the only difference between imagination and consciousness is that humans' capacity to identify themselves raises existential questions that are difficult to answer; from the point of view of information processing, however, these questions require the same resources as reasoning or imagination.

But how can this hypothesis be verified? One possible approach would be to build a system based on learning technologies that confirms the hypothesis. But would this confirmation be accepted as true, or would it simply be concluded that the system passes the Turing Test?

[1] Stanford Encyclopedia of Philosophy, “Kant’s View of the Mind and Consciousness of Self,” 2020 Oct 8. [Online]. Available: https://plato.stanford.edu/entries/kant-mind/. [Last access: 2021 Jun 6].

[2] S. J. Russell and P. Norvig, Artificial Intelligence: A Modern Approach, Pearson, 2021.

[3] A. Turing, “Computing Machinery and Intelligence,” Mind, vol. LIX, no. 236, pp. 433-460, 1950.

[4] C. C. Aggarwal, Neural Networks and Deep Learning, Springer, 2018.

[5] L. M. Adleman, “Molecular computation of solutions to combinatorial problems,” Science, vol. 266, no. 5187, pp. 1021-1024, 1994.

[6] E. R. Kandel, J. D. Koester, S. H. Mack and S. A. Siegelbaum, Principles of Neural Science, McGraw Hill, 2021.

[7] F. Rosenblatt, “The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain,” Psychological Review, vol. 65, no. 6, pp. 386-408, 1958.

[8] D. Silver, T. Hubert and J. Schrittwieser, “DeepMind,” [Online]. Available: https://deepmind.com/blog/article/alphazero-shedding-new-light-grand-games-chess-shogi-and-go. [Last access: 2021 Jun 6].